West Lake Bonney, Taylor Valley, Antarctica

Reporting from Blood Falls Basecamp

Today we decided to spread our wings a little bit and try a longer run. We had two goals to start with. The first was to make sure the navigation really is rock solid even over long distances, and the second was to try to find another piece of lost equipment that Peter thought might be waiting to snag us. These goals would take the bot 380 meters from the melt hole, which is actually the farthest we’ve ever sent the bot away from mission control, even including all of our previous tests in the States and the DepthX missions in Zacatón. In preparation for this rite of robot passage, Vickie swapped out the 400-meter-long fiber optic line we had been using up to now for a 1000-meter line. While normal fiber optic would sink and droop down to the halocline in Lake Bonney, this fiber optic has a special coating that allows it to float up to the ice ceiling. This way, when the bot spins in a circle or lowers the sonde, it won’t get wrapped up in the fiber.

Vickie unspools the kilometer-long fiber optic in preparation for the bot’s 380-meter journey away from the melt hole today.

A destination point was entered into the bot’s navigation system and Endurance motored off while Peter, Bill, and Vickie tracked it from the surface. The target was the last known GPS location of an old ablation stake (essentially an ~8-meter-long rod used to measure how much the lake ice ablates over time) that had disappeared into the ice in a previous year. Peter said there was a small chance that the stake could be sticking straight up out of the lake sediment and might serve as a fiber optic snag if we didn’t check it out first.

Vickie tracks the bot’s magnetic beacon using a loop antenna.

The GPS point we had turned out to be inaccurate by several meters, but there was also a flag marking the spot on the surface. Since we were able to locate the bot from its magnetic beacon, Bill could report a heading and distance to Shilpa, Kristof, and Chris at mission control, and they were able to drive right to the spot, confirming that both navigation and beacon were working correctly. A wrench securely tied to some rope had been lowered down a small melt hole at the target so that, looking from the forward-looking camera, mission control would be able to confirm that they had reached the spot. Reach it we did, and we proceeded to investigate the area, spinning the vehicle and using the forward-cam to look for the missing ablation stake. After some time spent hunting for the stake, we decided to bring the bot home for the final test of the day: visual homing.
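For readers who like to see the arithmetic, the heading-and-distance report relayed from the surface can be sketched in a few lines. This is only an illustration, not what we actually ran in the field: the function name, the flat local east/north coordinates in meters, and the choice to measure heading clockwise from grid north are all assumptions. A real report would also have to state which north it uses, since magnetic declination can be very large this close to the magnetic pole.

```python
import math

def heading_and_distance(bot_en, target_en):
    """Return (heading_deg, distance_m) from the bot to the target.

    Both positions are hypothetical (east, north) pairs in meters on a
    flat local grid. Heading is in degrees clockwise from grid north.
    """
    d_east = target_en[0] - bot_en[0]
    d_north = target_en[1] - bot_en[1]
    distance = math.hypot(d_east, d_north)
    # atan2(east, north) gives a compass-style angle: 0 = north, 90 = east.
    heading = math.degrees(math.atan2(d_east, d_north)) % 360.0
    return heading, distance

if __name__ == "__main__":
    # Made-up numbers: beacon fix at the origin, flag 3 m east and 4 m north.
    heading, distance = heading_and_distance((0.0, 0.0), (3.0, 4.0))
    print(f"drive {distance:.1f} m on heading {heading:.0f} degrees")
```

Mission control would then turn the bot to that heading and drive the stated distance, which is essentially what happened when the bot went looking for the wrench.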

This image comes from the bot’s forward-looking camera. When we saw the wrench in the field of view, we knew that we had reached the flagged point on the surface successfully.

One of the interesting technological problems with working under a 3-meter ice cap is the question of how to get a 2 m x 2 m bot covered with delicate instruments back out of the melt hole you tossed it into. Coming from the DepthX project, we knew that the bot’s dead-reckoning navigation was quite good and could get us back to within a few meters of the melt hole. But short of manually driving the vehicle up the 3-meter-tall melt hole, how could we get it to ascend at the right time? The answer was machine vision. We have an upward-looking camera on the bot, and above the center of the melt hole we have a 12-watt LED light that blinks on and off at a specific frequency. Shilpa worked with Aniket and Greg, two Stone Aerospace programmers back in Austin, to write a visual homing program that would start up when the bot approached the melt hole. It would use the camera to identify all of the light sources above it, pick out the bright light that blinked at the right frequency, lock onto that light, and then kick in the appropriate thrusters to center the vehicle under the light and follow it up to the surface. That is, if it worked.
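For the curious, here is a minimal sketch of the idea behind picking out a blinking light and centering under it. It is not the program Shilpa, Aniket, and Greg wrote, and every specific in it is an assumption made for illustration: the function names, the 30 frames-per-second camera, the 2 Hz blink rate, the score threshold, and the simple proportional steering. Each candidate light is represented by its pixel position and a short history of its brightness, and the score measures how much of the brightness variation sits at the expected blink frequency.

```python
import math

def blink_score(samples, frame_rate_hz, blink_hz):
    """Share of a brightness trace's variation found at blink_hz (about 0 to 1)."""
    n = len(samples)
    if n == 0:
        return 0.0
    mean = sum(samples) / n
    centered = [s - mean for s in samples]
    total = sum(c * c for c in centered)
    if total == 0.0:
        return 0.0  # a steady light does not blink at all
    # Correlate the trace with a sine and cosine at the blink frequency
    # (a single-frequency discrete Fourier transform).
    w = 2.0 * math.pi * blink_hz / frame_rate_hz
    re = sum(c * math.cos(w * k) for k, c in enumerate(centered))
    im = sum(c * math.sin(w * k) for k, c in enumerate(centered))
    return min(1.0, 2.0 * (re * re + im * im) / (n * total))

def pick_beacon(candidates, frame_rate_hz, blink_hz, min_score=0.5):
    """Return the name of the best-matching light, or None if none qualifies.

    candidates maps a name to (pixel_xy, brightness_samples).
    min_score is a hypothetical threshold, not a tuned value.
    """
    best_name, best_score = None, min_score
    for name, (_pixel_xy, samples) in candidates.items():
        score = blink_score(samples, frame_rate_hz, blink_hz)
        if score >= best_score:
            best_name, best_score = name, score
    return best_name

def centering_command(pixel_xy, image_size, gain=0.5):
    """Proportional steering: push toward the light's offset from image center.

    Returns (x_cmd, y_cmd), each the normalized pixel offset times gain.
    """
    (px, py), (width, height) = pixel_xy, image_size
    x_err = (px - width / 2.0) / (width / 2.0)
    y_err = (py - height / 2.0) / (height / 2.0)
    return (gain * x_err, gain * y_err)

if __name__ == "__main__":
    fps, hz = 30.0, 2.0  # assumed camera frame rate and LED blink rate
    lights = {
        # Two seconds of made-up brightness readings per light.
        "led": ((400, 260), [1.0 if k % 15 < 8 else 0.0 for k in range(60)]),
        "daylight": ((100, 50), [0.8] * 60),
    }
    locked = pick_beacon(lights, fps, hz)
    if locked is not None:
        print(locked, centering_command(lights[locked][0], (640, 480)))
```

Scoring by blink frequency rather than by brightness alone is what lets a scheme like this ignore steady light coming down through the ice or the hole and lock onto only the LED. Once the steering commands shrink toward zero, the vehicle is centered under the light and can start its ascent.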

We had tested the visual homing in our final bot tests over the summer and it appeared to work well in the wide open waters of NBL’s brobdingnagian tank. However, we had never been able to test how it would perform in a tall, narrow tube like the melt hole, where the consequences of running just a little off course meant hitting the wall. Shilpa urged us all to have faith in the program as we leaned over the railings around the melt hole, looking down and waiting for the bot. The orange edge of the bot’s syntactic became visible in the southwest quadrant of the melt hole. It started to pass under the hole, still under normal navigation. The regular pulse of the blinking light flashed off the water surface onto our faces. As the bot passed under the hole it stopped, shimmied a little to one side and then to the other, centering itself before ascending gracefully up the hole. The skin of the syntactic broke the surface of the water and the whole room cheered. Not only had the bot navigated to a target 380 meters away under a 3-meter ice cap, but it came home and, using the visual homing, popped straight up to the surface like all good robots should. We are beginning to feel like all of our hard work is paying off.

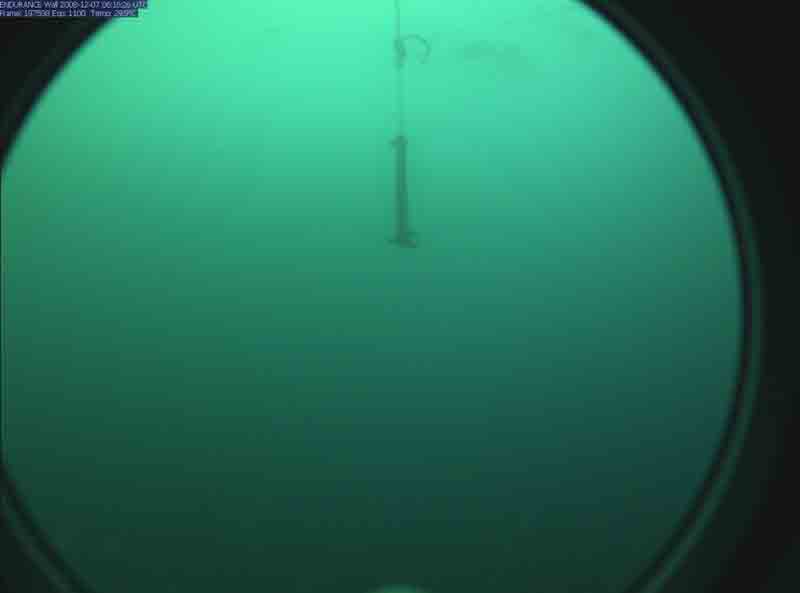

Video, recorded by the bot as it approached and ascended the melt hole: The bot moves along under the ice; the string wiggling above the camera is the fiber optic line. It reaches the edge of the melt hole, identifies the blinking light, locks onto it and ascends to a cheering crowd.

Reporting by Vickie Siegel